2025-12-15 05:55:21 PM

3-minute read | Published: 2025-06-29 10:57 PM

Important LLM Terms Every Developer Should Know to Optimize Effectively

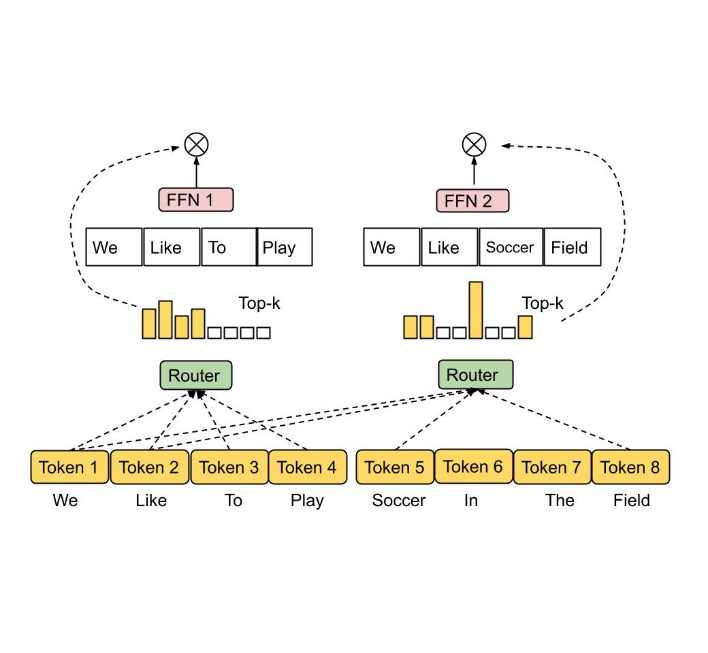

Important LLM terms every developer should know, such as parameters, tokens, context window, temperature, and hallucination, are critical to understanding how large language models work. Mastering these concepts helps developers design better prompts, reduce model errors, optimize API usage, and improve output quality. This article breaks down each term in a clear, practical way with real-world examples, from how tokens are counted to why hallucinations occur. You'll also learn how the context window (the maximum number of tokens a model can process in one request) limits what the model can "remember", how temperature adjusts the randomness of its output, and how model size (parameter count) affects cost and performance. Whether you're working with GPT, Gemini, Claude, or open-source LLMs, these concepts form the foundation of effective AI integration. We also share tips on prompt engineering and on retrieval techniques, such as retrieval-augmented generation (RAG), that reduce hallucinations. It's a must-read for developers building AI-driven tools or interfaces.
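To make "temperature adjusts randomness" concrete, here is a minimal Python sketch, assuming the common formulation in which the model's raw scores (logits) are divided by the temperature before a softmax turns them into probabilities. The four-word vocabulary and the logit values are made up for illustration, and the helper names `softmax_with_temperature` and `sample_token` are hypothetical. Hosted APIs for GPT, Gemini, or Claude expose temperature as a request parameter and apply it on the server, so you would not run this step yourself.

```python
import math
import random


def softmax_with_temperature(logits, temperature):
    """Turn raw scores (logits) into probabilities, scaled by temperature.

    Hypothetical helper for illustration only.
    """
    if temperature <= 0:
        # Temperature 0 is usually treated as greedy decoding (always pick the top token).
        raise ValueError("temperature must be positive; use argmax for greedy decoding")
    scaled = [x / temperature for x in logits]
    # Subtract the max before exponentiating to avoid overflow.
    m = max(scaled)
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample_token(vocab, logits, temperature, rng=random):
    """Pick one token at random, weighted by the temperature-scaled probabilities."""
    probs = softmax_with_temperature(logits, temperature)
    return rng.choices(vocab, weights=probs, k=1)[0]


# Made-up next-token candidates and scores.
vocab = ["cat", "dog", "car", "banana"]
logits = [2.0, 1.5, 0.5, -1.0]

for t in (0.2, 1.0, 2.0):
    probs = softmax_with_temperature(logits, t)
    print(f"T={t}: {[round(p, 3) for p in probs]} -> sampled {sample_token(vocab, logits, t)!r}")
```

Running it shows the pattern the article describes: at a low temperature almost all of the probability goes to the top-scoring token, so output is nearly deterministic, while a higher temperature flattens the distribution and makes less likely tokens appear more often. The ranking of tokens never changes; only how sharply the model favors the top one does.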
